[dev.icinga.com #9208] Monitoring Module does not take multiple instance names into account #1633

Comments

Updated by mfriedrich on 2015-04-30 15:56:32 +00:00

Updated by tgelf on 2015-05-01 10:04:05 +00:00

Hi Michael, if you agree, I'd like to leave this open and use this ticket for further discussion. Our plan is to silently fade out / deprecate support for multiple instances. This is an ancient feature meant to solve the "I have multiple monitoring nodes and want to see all objects in one place" problem. In environments with multiple instances we try to hide the fact that there are any. As you noted, all objects are merged, and we try to act as if there were only one instance, with the least possible effort. In practice, "instances" had a couple of issues:

I completely agree with you on two of your major points. IF there are multiple instances AND you configured multiple "command instances", they MUST be respected when sending commands. And "monitoring health" MUST be aware of multiple instances. If there are no issues for those two problems yet, we should create them. My love and support for multiple instances ends here. From a technical point of view, it would be easier than ever before to provide a correct and complete "multiple instance implementation" in Icinga Web 2. However, I'm currently not willing to do so. If you have a good real-world use case for them, please let me know; I'm always open to new input that broadens my horizon. But let me first explain our further plans:

To sum it all up: we will support multiple backends, but we do not like "instances" as they exist right now. We must support existing ones, to get them working as expected (commands) and to highlight issues in such environments (health). More related ideas and suggestions for improvement are highly appreciated. Cheers,

Updated by mfriedrich on 2015-05-01 11:01:27 +00:00

Hi Tom, I'm totally fine with this explanation. I was just a little irritated when I discussed this with Alex (basically the monitoring health overview showing the instance health) and then found that it was not implemented after all. Having two or more instances inside the database makes the data selected for the monitoring health view confusing. Although this should be hidden from users, the one thing I'd like to see is the version of the backend (it helps with problem support).

From a technical point of view I fully agree that instance_id leads to quite a lot of bloated implementation and problematic SQL queries, especially when the IDO connects and has to select the right instance_id based on its configured name. 99% of users never changed the instance_name from 'default' to something else; only those running a multiple instances scenario did, or developers testing all capabilities with Core 1.x and Web 1.x.

My primary concern is still that in the past we built distributed environments on top of the instance_id and instance_name columns with Icinga Web 1.x. So I guess there are installations with this kind of data integrity, where instance_id is the only qualifier next to object_id that identifies unique objects. Maybe not in customer projects (I've come across different distributed setups there in the past 2 years), but generally speaking this was mentioned all over the documentation. My guess is that users migrating from Web 1.x to Web 2.x will run into this kind of problem somehow.

So if the general strategy is to go with multiple databases, please explain that in the documentation once this feature is fully implemented (I have no idea whether combined backends currently work). And it would certainly be nice to be able to filter by "backend" then. Also add a note somewhere that instance_id is generally replaced by multiple backends, and explain that there too.

A guide similar to the existing "Icinga 1.x vs Icinga 2.x" migration documentation would certainly help users (and colleagues) prepare a clean migration. I don't expect that to happen now, but it would be nice to have such docs for the final 2.0.0 release :)

PS: Support for multiple backends was a long-demanded feature for Web 1.x which was never fully implemented, and it was also confused with instance_id support (ask Jannis about his nightmares in #892). Kind regards,

Updated by tgelf on 2015-05-01 13:24:26 +00:00

Hi Michael, great to hear that. As stated above, "health" MUST be instance-aware, so if it is not and there is no such issue yet, it would be great if you could create one. I hope that our current implementation (given that we get multiple command instances working correctly together with a single idb connection) will satisfy most, if not all, existing Web 1.x users. There will be no "filtering by backend", I guess, as there will be no single view showing data from multiple backends. List views will of course have to provide some knob that lets you switch environments. What we could also implement are hard-coded filters per backend (implemented exactly like permissions, so not much coding involved) that pre-filter the provided data. That way you could create multiple environments for multiple "legacy instances", each of them pre-filtered by instance. I'm not sure why anyone would want to do so, but it could be a workaround for those who believe they really need this feature. How should we proceed with this issue? I guess we should create dedicated issues for the remaining tasks, possibly link them to this one for further information, and close this ticket as soon as all the others have been created. Cheers,
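The "hard-coded filter per backend" idea could look roughly like the following minimal Python sketch. It is an illustration only, not Icinga Web 2 code (which is PHP): `Environment`, `fixed_filter` and the row dictionaries are hypothetical names, and the rows stand in for host records read from a single IDO backend that still contains two legacy instances.

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    """Hypothetical environment: one backend plus a fixed filter the user cannot remove."""

    name: str
    backend: str
    fixed_filter: dict[str, str] = field(default_factory=dict)

    def apply(self, rows: list[dict[str, str]]) -> list[dict[str, str]]:
        # Keep only rows that match every key/value pair of the fixed filter,
        # similar to how a permission restriction narrows a query.
        return [
            row
            for row in rows
            if all(row.get(key) == value for key, value in self.fixed_filter.items())
        ]


# Example rows from one backend holding two legacy instances (made-up data).
hosts = [
    {"host_name": "web01", "instance_name": "default"},
    {"host_name": "db01", "instance_name": "default"},
    {"host_name": "lab01", "instance_name": "icinga-dev"},
]

production = Environment("production", "ido", {"instance_name": "default"})
dev = Environment("dev", "ido", {"instance_name": "icinga-dev"})

print([h["host_name"] for h in production.apply(hosts)])  # ['web01', 'db01']
print([h["host_name"] for h in dev.apply(hosts)])  # ['lab01']
```

Both environments share the same backend and differ only in their fixed filter, which is why no separate "filter by backend" view would be needed in this design.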

Updated by mfriedrich on 2015-05-01 13:37:48 +00:00

Updated by mfriedrich on 2015-05-01 13:42:27 +00:00

Updated by mfriedrich on 2015-05-01 13:45:44 +00:00

Updated by mfriedrich on 2015-05-01 13:47:13 +00:00

Hi Tom, tgelf wrote:

If the command backends are linked to data backend resources, this should be fine. AFAIK this check is implemented, and there are also some references to instance_id, but it looks incomplete. For monitoring health itself, see #9213. I did not update #9207, as it only concerns the program version, which probably works fine. One thing that would make sense: check whether there are multiple rows in the icinga_instances table, and add a warning to the setup wizard/monitoring health. See #9214
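The check suggested here (warn when `icinga_instances` holds more than one row) can be sketched as follows. This is a hypothetical Python illustration, not Icinga Web 2 code. It uses an in-memory SQLite table as a stand-in for the IDO table and assumes only the `instance_id` and `instance_name` columns; a real check would query the configured IDO database instead.

```python
import sqlite3


def instance_warnings(conn: sqlite3.Connection) -> list[str]:
    """Return a warning if more than one instance is stored in the IDO database."""
    rows = conn.execute(
        "SELECT instance_id, instance_name FROM icinga_instances ORDER BY instance_id"
    ).fetchall()
    if len(rows) <= 1:
        return []  # a single instance is the normal case: nothing to report
    listing = ", ".join(f"{name} (id {instance_id})" for instance_id, name in rows)
    return [f"{len(rows)} instances found in icinga_instances: {listing}"]


# Demo against a stand-in table (assumed minimal schema, not the full IDO schema).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE icinga_instances (instance_id INTEGER PRIMARY KEY, instance_name TEXT)"
)
conn.executemany(
    "INSERT INTO icinga_instances (instance_id, instance_name) VALUES (?, ?)",
    [(1, "default"), (2, "icinga-dev")],
)
for warning in instance_warnings(conn):
    # prints: WARNING: 2 instances found in icinga_instances: default (id 1), icinga-dev (id 2)
    print("WARNING:", warning)
```

Listing the instance names in the warning would also help with the "dead data" case discussed below, for example a stale 1.x instance that no longer runs.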

Sounds good to me :)

Well, it will help sort out issues if there's dead data in one of the interfaces. This is more of a maintenance thought: as we all know, monitoring environments see different admins over time, and at some point things might get confusing. It's not necessarily something we need in 2.0.0, but it would make troubleshooting a little more convenient. Still, I would wait until users have gained experience with that feature before actually making this visible to them.

Works for me :) Others having the same "problem" might just find this issue and the related discussion/solution. Kind regards,

Updated by jmeyer on 2015-05-05 11:27:55 +00:00

Updated by elippmann on 2015-07-15 12:17:37 +00:00

Updated by jmeyer on 2015-07-16 13:46:17 +00:00

Updated by jmeyer on 2015-08-18 11:45:19 +00:00

Updated by jmeyer on 2015-08-18 11:46:20 +00:00

Updated by elippmann on 2015-10-01 21:44:18 +00:00

Updated by elippmann on 2015-11-20 13:10:57 +00:00

Updated by elippmann on 2016-10-05 07:52:43 +00:00

Won't fix.

This issue has been migrated from Redmine: https://dev.icinga.com/issues/9208

Created by mfriedrich on 2015-04-30 15:50:12 +00:00

Assignee: (none)

Status: Closed (closed on 2016-10-05 07:52:43 +00:00)

Target Version: (none)

Last Update: 2016-10-05 07:52:43 +00:00 (in Redmine)

General problem

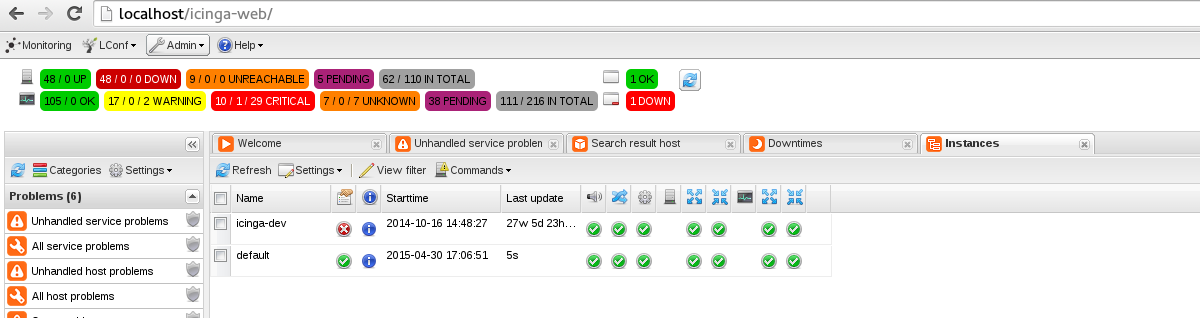

It seems that Icinga Web 2 is generally not aware of instances (instance_name, instance_id) and only shows one active instance.

I do have two instances configured (1.x and 2.x), but for some strange reason only the 2.x instance is taken into account.

Please explain how this is supposed to work, and fix it accordingly to support multiple instances inside one (database/livestatus) backend.

Monitoring health summary

Only shows one instance.

The instance_id cannot be filtered either.

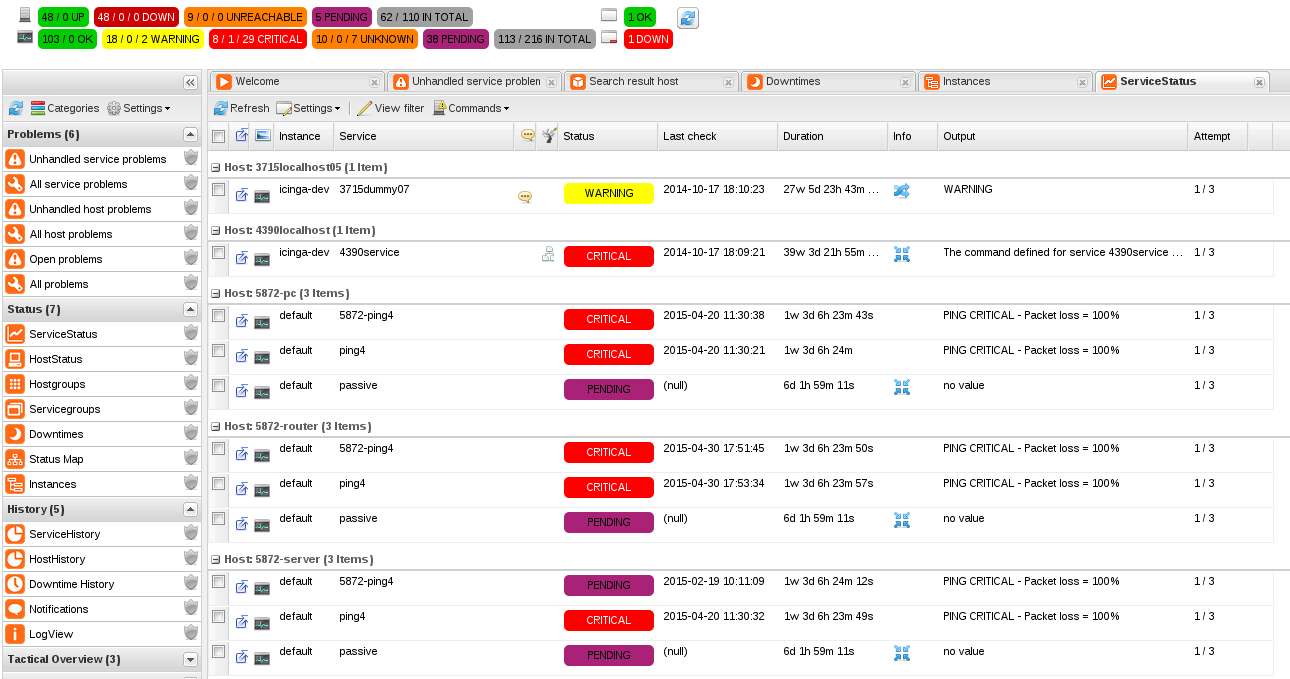

UI merges all instances

The web interface does show all hosts and services from both instances, even if 'icinga-dev' (my 1.x instance) is not running. The monitoring health view does not take that into account either.

Commands

When a command is sent from the detail views, it should take the object's instance_id and instance_name into account and select the command pipe by instance name. This can be configured through the GUI/config, but currently the configured instance is not matched, so the setting seems to be ignored.
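The expected behaviour, picking the command pipe by the object's instance name, could look like the minimal Python sketch below. It is not Icinga Web 2 code: `CommandTransport` and `select_transport` are hypothetical names, and the two pipe paths are only example values for a 2.x and a 1.x instance. Your installation's paths may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CommandTransport:
    """Hypothetical command pipe configured for one monitoring instance."""

    instance_name: str
    path: str


def select_transport(
    instance_name: str, transports: list[CommandTransport]
) -> CommandTransport:
    """Pick the command pipe whose instance matches the object's instance_name."""
    for transport in transports:
        if transport.instance_name == instance_name:
            return transport
    # Fail loudly instead of silently sending the command to another instance.
    raise LookupError(f"no command transport configured for instance {instance_name!r}")


# Example configuration: one 2.x instance and one 1.x instance (example paths).
transports = [
    CommandTransport("default", "/var/run/icinga2/cmd/icinga2.cmd"),
    CommandTransport("icinga-dev", "/var/lib/icinga/rw/icinga.cmd"),
]

# A host loaded from the backend carries the instance_name it belongs to.
host = {"host_name": "lab01", "instance_name": "icinga-dev"}
print(select_transport(host["instance_name"], transports).path)
```

Raising an error for an unknown instance is a deliberate choice in this sketch. Falling back to the first configured pipe would reproduce the behaviour described in this issue, where commands ignore the instance.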

Code

It seems this is not implemented.

Attachments

Subtasks:

Relations:
