[dev.icinga.com #2688] triggered downtimes for child hosts are missing after icinga restart #1006

Comments

Updated by mlucka on 2012-06-14 15:42:00 +00:00

I did not test this on earlier icinga versions, but nagios 3.0.6 and 3.2.1 don't have this issue. Maybe this information will help you a little bit while investigating...

Updated by mfriedrich on 2012-06-14 15:45:43 +00:00

please provide some sample configs, as well as logs generated out of this.

Updated by mlucka on 2012-06-14 15:48:58 +00:00

This could be the reason/solution: http://tracker.nagios.org/view.php?id=338

Found some seconds ago...

Updated by mfriedrich on 2012-06-14 16:07:35 +00:00

no. nagios 3.4.x took an icinga patch from 2 years ago, which has been rewritten since then in icinga upstream. icinga handles a restart (and therefore a downtime not being in effect) differently, see common/downtime.c starting with

that patch addresses hosts in downtime not being persistent anymore after restart. you are talking about child hosts triggered by the parent, which is a different story. so please provide your configs and logs (plus debug logs in that special case) in order to see if your bug report is valid and reproducible.

Updated by mlucka on 2012-06-15 14:54:43 +00:00

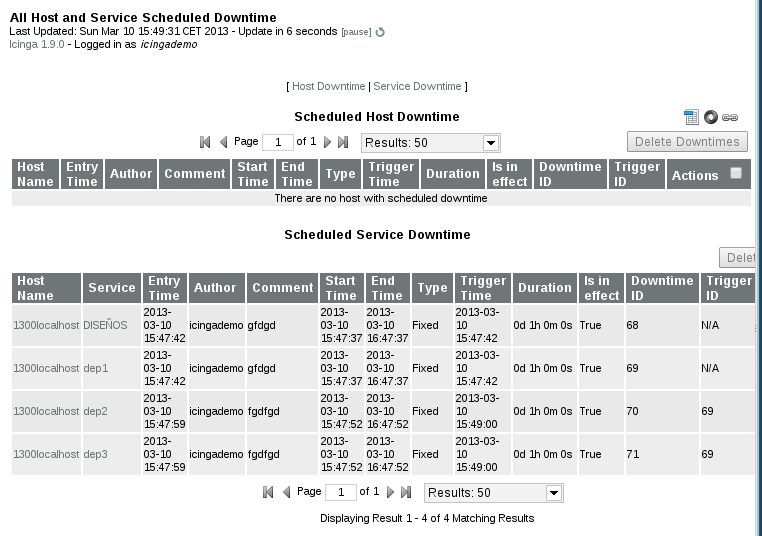

Hi, please find attached sample config, logs and screenshots... I reproduced the behavior as follows (debian squeeze, up to date, 32bit):

I think you can easily reproduce it yourself. An icinga 1.6.2 installation was tested as well, showing the same results.

Best regards

Michael

Updated by mfriedrich on 2012-06-15 15:33:31 +00:00

thanks for the detailed report, i'll put it on my todo list after 1.7.1 is out, plus when i am a puppet master.

Updated by mlucka on 2012-10-01 17:20:59 +00:00

Hi, is there any schedule available for when this issue could be fixed?

Best regards

Michael

Updated by mfriedrich on 2012-10-02 08:06:32 +00:00

haven't had the time yet. hopefully others have - otherwise it will remain a todo.

Updated by mfriedrich on 2012-10-24 18:37:05 +00:00

Updated by mfriedrich on 2012-10-30 20:10:35 +00:00

testing with f78e443 as latest commit.

- status_dat_before_first_stop
- retention_dat_after_first_stop
- status_dat_after_first_start
- retention_dat_after_second_stop
- status_dat_after_second_start
- retention_dat_after_third_stop

Updated by mfriedrich on 2012-10-30 20:23:19 +00:00

so, as it's a bit late today - i can reproduce and see it, but i am not sure where exactly this is being hit, or ignored. might need some deep down debug sessions.

Updated by mlucka on 2013-02-28 15:44:21 +00:00

Hello, attached are the collected works of a colleague who is trying to migrate from Nagios to Icinga 1.7.1 (Debian 7). Please review and integrate the patch.

Regards, Micha.

Attached is a patch for the downtime problem in Icinga. The problem is:

Quote from common/downtime.c:add_downtime

However, retention.dat/status.dat is only read sequentially.

Assumptions (of the patch):

Side note: why the sort function was programmed the way it was

Updated by mlucka on 2013-02-28 16:41:49 +00:00

CORRECTION: Instead of

Updated by mfriedrich on 2013-03-04 19:58:27 +00:00

that looks like a hell of an idea, thanks. once i get a little more dev time, i'll try to re-think and test it.

Updated by mfriedrich on 2013-03-13 23:18:34 +00:00

Updated by mfriedrich on 2013-03-13 23:20:41 +00:00

used the wrong commit id

Updated by mfriedrich on 2013-04-06 22:02:32 +00:00

are you able to test current git master/next?

Updated by mfriedrich on 2013-04-10 18:48:09 +00:00

This issue has been migrated from Redmine: https://dev.icinga.com/issues/2688

Created by mlucka on 2012-06-14 15:39:21 +00:00

Assignee: mfriedrich

Status: Resolved (closed on 2013-04-10 18:48:09 +00:00)

Target Version: 1.9

Last Update: 2013-04-10 18:48:09 +00:00 (in Redmine)

Hi,

there's an issue with the triggered downtime feature, seen on icinga 1.7.0 and nagios 3.2.3 and above...

triggered downtimes (type: fixed, child hosts: schedule triggered downtime for all child hosts) for child hosts are deleted during an icinga restart. the downtime on the master host (parent) is not affected.

This should be easy to reproduce with just 2 hosts. If you need further information on that subject, don't hesitate to get in touch with me.
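For a two-host reproduction, the same kind of downtime can also be scheduled through the external command interface rather than the web interface. The sketch below only builds the command line for `SCHEDULE_AND_PROPAGATE_TRIGGERED_HOST_DOWNTIME`, which should correspond to the "schedule triggered downtime for all child hosts" option. The helper name, host name, author and comment are placeholders, and the resulting line would still have to be written to the external command file configured via `command_file`.

```python
import time


def propagate_triggered_downtime_command(host_name, start, end, author, comment, now=None):
    """Build an external command line for a fixed, propagated, triggered host downtime.

    Field order: host_name;start_time;end_time;fixed;trigger_id;duration;author;comment
    """
    now = int(time.time()) if now is None else int(now)
    fixed = 1        # fixed downtime, as in the report
    trigger_id = 0   # the parent downtime itself is not triggered by another one
    duration = int(end) - int(start)
    return (
        f"[{now}] SCHEDULE_AND_PROPAGATE_TRIGGERED_HOST_DOWNTIME;"
        f"{host_name};{int(start)};{int(end)};{fixed};{trigger_id};{duration};"
        f"{author};{comment}"
    )


# Placeholder values: a one-hour downtime on a hypothetical parent host.
start = int(time.time())
print(propagate_triggered_downtime_command(
    "parent-host", start, start + 3600, "admin", "triggered downtime restart test"))
```

After scheduling, comparing status.dat and retention.dat before and after a restart shows whether the child host's downtime entry survived.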

Best regards

Michael

Attachments

Changesets

2012-10-30 20:12:47 +00:00 by mfriedrich 2b671f4
